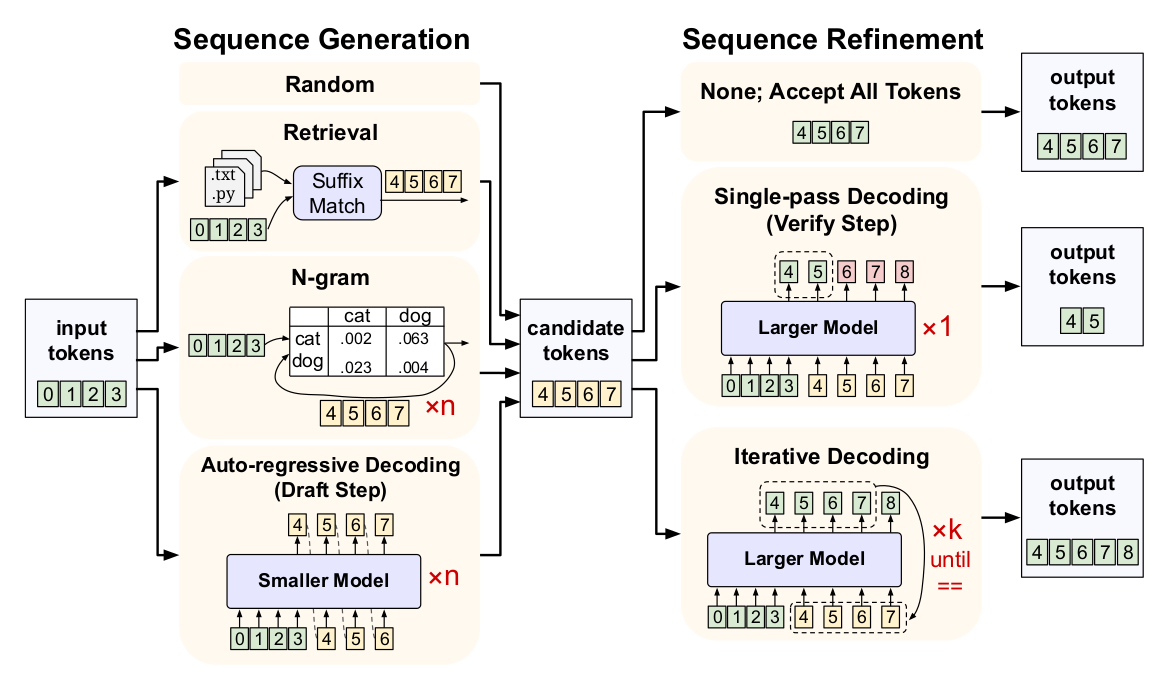

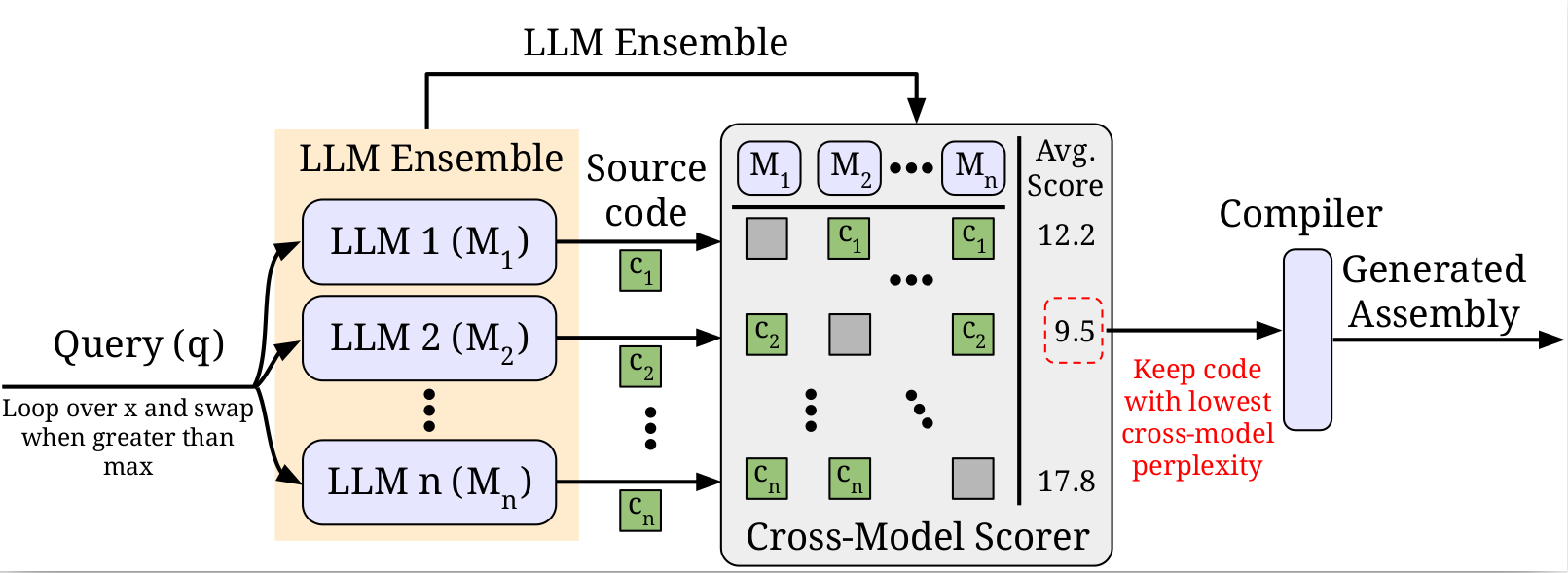

My research spans the intersection of deep learning, hardware architecture, and computer networks, with a particular focus on developing efficient algorithms and systems for deploying artificial intelligence. I have extensive experience optimizing deep neural networks (DNNs) through approaches including systolic array implementations, sparse architectures, and quantization. My work has contributed both theoretical frameworks and practical implementations for accelerating DNN inference on edge devices, especially in distributed network environments. Recently, I have expanded my research to address efficiency challenges in emerging AI systems, particularly large language models (LLMs) and multimodal architectures, as reflected in my work on speculative decoding and transformer optimization.