LLMs for Programming Languages

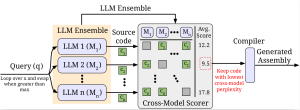

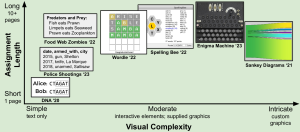

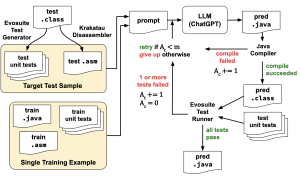

My research explores the use of Large Language Models (LLMs) for programming-language tasks, with a focus on code translation, generation, and verification. Recent projects include using instruction-tuned models such as ChatGPT as effective Java decompilers, which produce more readable source code than traditional, non-LLM decompilers, and developing strategies for detecting malicious or incorrect code through cross-model validation. By combining LLM-based methods with multi-model consensus, this work aims to improve both the quality and the security of AI-generated code.
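To make the idea of multi-model consensus concrete, here is a minimal, generic sketch. It is not the method from the publication below. It assumes several models have each produced an implementation of the same function, runs every candidate on shared test inputs, and flags any candidate whose output disagrees with the majority. The names `flag_outliers`, `model_a`, `model_b`, and `model_c` are hypothetical and used only for illustration.

```python
from collections import Counter
from typing import Callable, Dict, List, Sequence


def flag_outliers(candidates: Dict[str, Callable[[int], int]],
                  inputs: Sequence[int]) -> List[str]:
    """Return the names of candidates that disagree with the majority on any input.

    Hypothetical illustration of cross-model validation: each candidate is
    assumed to be an implementation of the same spec, generated by a different model.
    """
    flagged = set()
    for x in inputs:
        results = {}
        for name, fn in candidates.items():
            try:
                results[name] = fn(x)
            except Exception:
                # A crash is recorded as None, so it counts as a distinct
                # (usually disagreeing) output.
                results[name] = None
        # The most frequent output on this input is taken as the consensus.
        majority, _ = Counter(results.values()).most_common(1)[0]
        for name, out in results.items():
            if out != majority:
                flagged.add(name)
    return sorted(flagged)


# Hypothetical candidates: model_c hides a wrong result for one input.
candidates = {
    "model_a": lambda n: n * n,
    "model_b": lambda n: n ** 2,
    "model_c": lambda n: n * n if n != 7 else 0,
}

print(flag_outliers(candidates, range(10)))  # ['model_c']
```

Majority voting is the simplest consensus rule. It cannot catch a defect that most models share, and it depends on test inputs that actually reach the faulty behavior, which is why the inputs matter as much as the number of models.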

Relevant Publications

Beyond Trusting Trust: Multi-Model Validation for Robust Code Generation