Computer Science Education

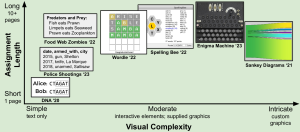

Large Language Models (LLMs) present both opportunities and challenges for CS education. While these tools can help students debug code, clarify concepts, and generate test cases, they also risk undermining authentic learning when used to solve programming assignments automatically. My current research explores how assignment structures (particularly multi-part problems, visual tasks, and context-sensitive scenarios) can be designed to resist direct LLM-generated solutions. By analyzing students’ interactions with LLMs in realistic educational settings, this work seeks to identify effective strategies for leveraging generative AI’s educational benefits while preserving genuine student learning and engagement. In the longer term, I am interested in how these tools can help students reflect more effectively on the work they have done.