Adaptive Inference

Most research on efficient inference focuses on reducing the amount of computation performed for each sample (e.g., an image in a computer vision task). In contrast, adaptive inference methods vary the amount of computation based on sample difficulty. This raises at least two interesting questions:

- How should a DNN be structured so that the amount of computation can vary efficiently?

- What mechanism should be used to determine how much computation is needed?

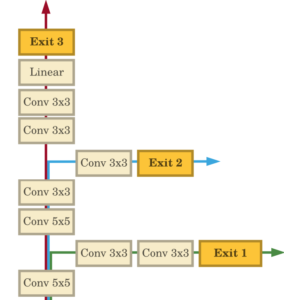

BranchyNet was one of the first papers to employ an adaptive inference strategy for DNNs, modifying the DNN structure by adding multiple exit points. As new types of DNNs have been proposed over time (e.g., with the addition of skip connections), corresponding adaptive inference methods have been developed to take advantage of these new structures [1, 2, 3, 4].
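The early-exit idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the BranchyNet implementation: each hypothetical `stage` is a callable that transforms a feature vector, each hypothetical `head` maps features to class logits, and a sample leaves at the first exit whose softmax entropy falls below that exit's threshold (an entropy criterion in the spirit of the one described for BranchyNet). The final exit always accepts the sample.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical interfaces: a stage transforms features, a head produces logits.
Stage = Callable[[List[float]], List[float]]
Head = Callable[[List[float]], List[float]]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy in nats; low entropy means a confident prediction."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def early_exit_infer(
    x: Sequence[float],
    stages: Sequence[Stage],
    heads: Sequence[Head],
    thresholds: Sequence[float],
) -> Tuple[int, int]:
    """Run stages in order and return (predicted_class, exit_index).

    thresholds has one entry per non-final exit (assumed convention);
    the final exit accepts every sample that reaches it.
    """
    h = list(x)
    last = len(stages) - 1
    for i, (stage, head) in enumerate(zip(stages, heads)):
        h = stage(h)  # only the computation up to exit i is paid for
        probs = softmax(head(h))
        if i == last or entropy(probs) < thresholds[i]:
            pred = max(range(len(probs)), key=probs.__getitem__)
            return pred, i
    raise ValueError("stages must be non-empty")


if __name__ == "__main__":
    # Toy network: each stage doubles the features; each head uses them as logits.
    double = lambda h: [2.0 * v for v in h]
    identity = lambda h: list(h)
    stages, heads = [double, double, double], [identity, identity, identity]
    print(early_exit_infer([5.0, 0.0], stages, heads, [0.3, 0.3]))  # (0, 0): easy, exits first
    print(early_exit_infer([0.1, 0.0], stages, heads, [0.3, 0.3]))  # (0, 2): hard, runs all stages
```

In this toy setup the confident input never pays for the second and third stages, which is exactly the saving adaptive inference targets; the thresholds control how aggressively samples are allowed to leave early.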

Less attention has been paid to the second question: designing mechanisms that better predict the difficulty of samples.
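Because the exit decision is often a simple threshold rule, the quality of a difficulty-prediction mechanism shows up directly in how much computation it saves. The sketch below uses hypothetical per-exit entropy values and per-stage costs (illustrative numbers, not measured data) to assign each sample to an exit and compute the average cost per sample compared with always running the full network. It assumes the cost of the exit branches themselves is negligible.

```python
import itertools
from typing import List, Sequence


def choose_exits(entropies: Sequence[Sequence[float]], thresholds: Sequence[float]) -> List[int]:
    """Return the exit index for each sample.

    entropies[s][i] is the (assumed precomputed) entropy of sample s at exit i.
    thresholds has one entry per non-final exit; the final exit always accepts.
    """
    exits = []
    for per_exit in entropies:
        last = len(per_exit) - 1
        chosen = last
        for i in range(last):
            if per_exit[i] < thresholds[i]:
                chosen = i  # first sufficiently confident exit wins
                break
        exits.append(chosen)
    return exits


def average_cost(exits: Sequence[int], stage_costs: Sequence[float]) -> float:
    """Mean cost per sample, where exiting at i pays for stages 0..i.

    Simplifying assumption: exit-branch (head) cost is ignored.
    """
    cumulative = list(itertools.accumulate(stage_costs))
    return sum(cumulative[e] for e in exits) / len(exits)


if __name__ == "__main__":
    # Hypothetical entropies for three samples at three exits.
    entropies = [[0.05, 0.02, 0.01], [0.6, 0.2, 0.1], [0.9, 0.7, 0.4]]
    stage_costs = [1.0, 1.0, 2.0]  # hypothetical relative cost per stage
    exits = choose_exits(entropies, thresholds=[0.1, 0.3])
    print(exits)  # [0, 1, 2]
    print(f"{average_cost(exits, stage_costs):.2f} vs {sum(stage_costs):.2f}")  # 2.33 vs 4.00
```

Under this framing, a mechanism that better predicts difficulty would route more samples to early exits without sacrificing accuracy, lowering the average cost for the same threshold budget.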

Relevant Publications

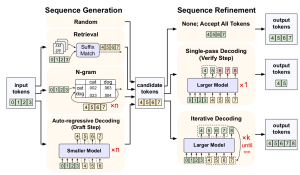

Speculative Decoding and Beyond: An In-Depth Review of Techniques

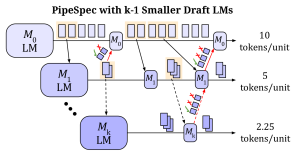

PipeSpec: Breaking Stage Dependencies in Hierarchical LLM Decoding

Bradley McDanel, Sai Qian Zhang, Yunhai Hu, Zining Liu

ACL Findings, 2025.

preprint | code

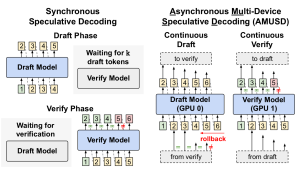

AMUSD: Asynchronous Multi-Device Speculative Decoding for LLM Acceleration

Bradley McDanel

IEEE International Symposium on Circuits and Systems (ISCAS), 2025.

preprint | paper | code

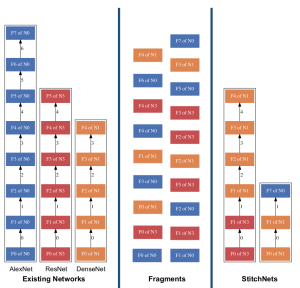

StitchNet: Composing Neural Networks from Pre-Trained Fragments

Surat Teerapittayanon, Marcus Comiter, Bradley McDanel, H. T. Kung

IEEE International Conference on Machine Learning and Applications (ICMLA), 2023.

preprint

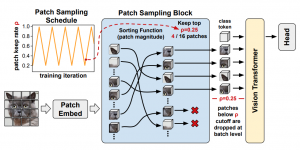

Accelerating Vision Transformer Training via a Patch Sampling Schedule

Bradley McDanel, Chi Phuong Huynh

IEEE International Conference on Machine Learning and Applications (ICMLA), 2023.

preprint | code

FAST: DNN Training Under Variable Precision Block Floating Point with Stochastic Rounding

S. Zhang, B. McDanel, H. T. Kung

28th IEEE International Symposium on High-Performance Computer Architecture (HPCA-28), 2022.

preprint

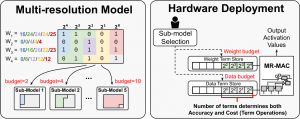

Field-Configurable Multi-resolution Inference: Rethinking Quantization

S. Zhang, B. McDanel, H. T. Kung, X. Dong

26th ACM International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS), 2021

preprint

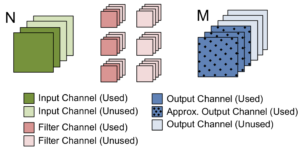

Incomplete Dot Products for Dynamic Computation Scaling in Neural Network Inference

B. McDanel, S. Teerapittayanon, H. T. Kung

International Conference on Machine Learning and Applications (ICMLA), 2017

paper

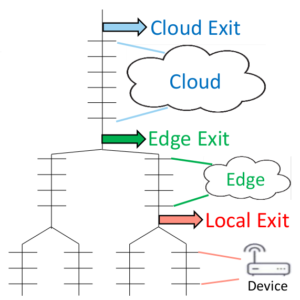

Distributed Deep Neural Networks over the Cloud, the Edge and End Devices

S. Teerapittayanon, B. McDanel, H. T. Kung

International Conference on Distributed Computing Systems (ICDCS), 2017

paper | code

BranchyNet: Fast Inference via Early Exiting from Deep Neural Networks

S. Teerapittayanon, B. McDanel, H. T. Kung

International Conference on Pattern Recognition (ICPR), 2016

paper

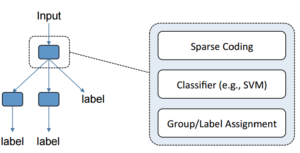

Sparse Coding Trees with Application to Emotion Classification

H. Chen, M. Z. Comiter, H. T. Kung, B. McDanel

IEEE Workshop on Analysis and Modeling of Faces and Gestures (CVPR Workshop), 2015

Best paper award

paper